Montgomery's pair correlation conjecture

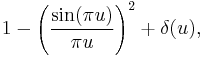

In mathematics, Montgomery's pair correlation conjecture is a conjecture made by Hugh Montgomery (1973) that the pair correlation between zeros of the Riemann zeta function (normalized to have unit average spacing) is

$$1-\left(\frac{\sin(\pi u)}{\pi u}\right)^2,$$

which, as Freeman Dyson pointed out to him, is the same as the pair correlation function of random Hermitian matrices from the Gaussian unitary ensemble (GUE). Informally, this means that the chance of finding a zero in a very short interval of length 2πL/log(T) at a distance 2πu/log(T) from a zero 1/2 + iT is about L times the expression above. (The factor 2π/log(T) is a normalization factor that can be thought of informally as the average spacing between zeros with imaginary part about T.)

Andrew Odlyzko (1987) showed that large-scale computer calculations of the zeros support the conjecture. The conjecture has been extended to correlations of more than two zeros, and also to L-functions of automorphic representations (Rudnick & Sarnak 1996).
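The informal reading above can be made concrete in a few lines of Python. This is a minimal sketch, not a statement of any theorem: the function names are hypothetical, distances are assumed to be already normalized by the factor 2π/log(T), and `expected_neighbours` simply integrates the conjectured density numerically (midpoint rule) to count nearby zeros heuristically.

```python
import math


def pair_correlation(u: float) -> float:
    """Conjectured pair correlation density 1 - (sin(pi*u) / (pi*u))**2.

    u is the distance between two zeros in units of the mean spacing.
    At u = 0 the expression is replaced by its limit, 0.
    """
    if u == 0.0:
        return 0.0
    s = math.sin(math.pi * u) / (math.pi * u)
    return 1.0 - s * s


def mean_spacing(T: float) -> float:
    """Informal mean spacing 2*pi / log(T) of zeros with imaginary part near T."""
    return 2.0 * math.pi / math.log(T)


def chance_of_zero_in_window(u: float, L: float) -> float:
    """Heuristic: the chance of a zero in a window of normalized length L at
    normalized distance u from a given zero is about L * pair_correlation(u).
    Only meaningful when L is small."""
    return L * pair_correlation(u)


def expected_neighbours(a: float, steps: int = 10_000) -> float:
    """Heuristic expected number of zeros at normalized distance in (0, a]
    on one side of a given zero: the integral of pair_correlation over
    [0, a], approximated with the midpoint rule."""
    h = a / steps
    return h * sum(pair_correlation((k + 0.5) * h) for k in range(steps))
```

For example, `expected_neighbours(0.1)` comes out close to π²(0.1)³/9 ≈ 0.0011, whereas uncorrelated points with unit average spacing would give 0.1; this is the repulsion between zeros. For large a the count approaches a − 1/2, because the integral of (sin πu/(πu))² over [0, ∞) equals 1/2.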

Montgomery studied the Fourier transform F(x) of the pair correlation function and showed, assuming the Riemann hypothesis, that F(x) = |x| for |x| < 1. His methods could not determine F(x) for |x| ≥ 1, but he conjectured that F(x) = 1 there; this conjecture implies that the pair correlation function is the expression above.
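A short calculation indicates why F(x) = min(|x|, 1) corresponds to the density 1 − (sin(πu)/(πu))². This sketch assumes the convention 𝓕[f](x) = ∫ f(u) e^{−2πixu} du and, as an assumption about normalization, that the full pair correlation also carries a point mass δ(u) from each zero paired with itself; that term and the constant 1 produce the extra 1 and δ(x) below.

$$
\mathcal{F}\!\left[\left(\frac{\sin \pi u}{\pi u}\right)^{2}\right](x) = \max(1-|x|,\,0), \qquad \mathcal{F}[1](x) = \delta(x), \qquad \mathcal{F}[\delta](x) = 1,
$$

so

$$
\mathcal{F}\!\left[1-\left(\frac{\sin \pi u}{\pi u}\right)^{2}+\delta(u)\right](x) = \delta(x) + 1 - \max(1-|x|,\,0) = \delta(x) + \min(|x|,\,1).
$$

Away from x = 0 this equals |x| for |x| < 1, matching Montgomery's theorem, and 1 for |x| ≥ 1, matching his conjecture.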

References

- Katz, Nicholas M.; Sarnak, Peter (1999), "Zeroes of zeta functions and symmetry", Bulletin of the American Mathematical Society. New Series 36 (1): 1–26, doi:10.1090/S0273-0979-99-00766-1, ISSN 0002-9904, MR1640151, http://www.ams.org/bull/1999-36-01/S0273-0979-99-00766-1/home.html

- Montgomery, Hugh L. (1973), "The pair correlation of zeros of the zeta function", Analytic number theory, Proc. Sympos. Pure Math., XXIV, Providence, R.I.: American Mathematical Society, pp. 181–193, MR0337821

- Odlyzko, A. M. (1987), "On the distribution of spacings between zeros of the zeta function", Mathematics of Computation (American Mathematical Society) 48 (177): 273–308, doi:10.2307/2007890, ISSN 0025-5718, JSTOR 2007890, MR866115

- Rudnick, Zeév; Sarnak, Peter (1996), "Zeros of principal L-functions and random matrix theory", Duke Mathematical Journal 81 (2): 269–322, doi:10.1215/S0012-7094-96-08115-6, ISSN 0012-7094, MR1395406, http://projecteuclid.org/euclid.dmj/1077245671